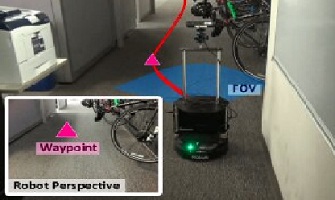

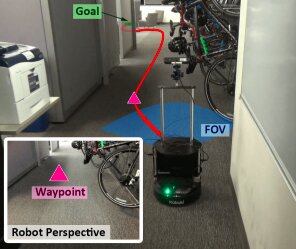

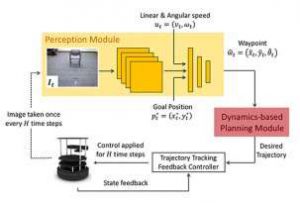

The researchers consider the problem of navigating from a start position to a goal position. Their approach (WayPtNav) consists of a learning-based perception module and a dynamics model-based planning module. The perception module predicts a waypoint from the current first-person RGB image observation. The model-based planning module then uses this waypoint to design a controller that smoothly regulates the system to it. This process is repeated for each new image until the robot reaches the goal.
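The predict-then-track loop described in the caption can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and is not taken from the paper: `predict_waypoint` is a hypothetical stand-in for the learned perception module (it uses the robot's pose rather than an RGB image), `track_waypoint` is a simple proportional controller on a unicycle model rather than the authors' planner, and all names and gains are invented.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    theta: float  # heading in radians


def predict_waypoint(pose, goal, lookahead=0.5):
    """Hypothetical stand-in for the learned perception module.

    The real module would predict a waypoint from an RGB image; this stub
    simply picks a point `lookahead` metres along the line to the goal.
    """
    dx, dy = goal[0] - pose.x, goal[1] - pose.y
    dist = math.hypot(dx, dy)
    if dist <= lookahead:
        return goal
    scale = lookahead / dist
    return (pose.x + scale * dx, pose.y + scale * dy)


def track_waypoint(pose, waypoint, dt=0.1, steps=10, v_max=0.6, k_w=2.0):
    """Assumed controller: proportional steering on a unicycle model."""
    for _ in range(steps):
        dx, dy = waypoint[0] - pose.x, waypoint[1] - pose.y
        dist = math.hypot(dx, dy)
        if dist < 1e-3:
            break
        # Wrap the heading error into [-pi, pi].
        err = math.atan2(dy, dx) - pose.theta
        err = math.atan2(math.sin(err), math.cos(err))
        # Slow down when misaligned or close, so the robot does not overshoot.
        v = min(v_max, dist / dt) * max(0.0, math.cos(err))
        w = k_w * err
        pose = Pose(
            pose.x + v * math.cos(pose.theta) * dt,
            pose.y + v * math.sin(pose.theta) * dt,
            pose.theta + w * dt,
        )
    return pose


def navigate(start, goal, tol=0.1, max_iters=200):
    """Repeat: predict a waypoint, track it, then re-observe."""
    pose = start
    for i in range(max_iters):
        if math.hypot(goal[0] - pose.x, goal[1] - pose.y) < tol:
            return pose, i
        waypoint = predict_waypoint(pose, goal)
        pose = track_waypoint(pose, waypoint)
    return pose, max_iters


if __name__ == "__main__":
    final_pose, iterations = navigate(Pose(0.0, 0.0, 0.0), (3.0, 2.0))
    print(final_pose, iterations)
```

The sketch has no obstacles and no camera, so it only shows the structure of the loop: a waypoint is re-predicted after every short tracking phase, which is what lets the real system react to newly observed parts of the environment.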

Researchers at UC Berkeley and Facebook AI Research have recently developed a new approach for robot navigation in unknown environments. Their approach, presented in a paper pre-published on arXiv, combines model-based control techniques with learning-based perception.

Model-based control is a popular paradigm for robot navigation because it can leverage a known dynamics model to efficiently plan robust robot trajectories. However, it is challenging to use model-based methods in settings where the environment is a priori unknown and can only be observed partially through on-board sensors on the robot.

The development of tools that allow robots to navigate surrounding environments is a key and ongoing challenge in the field of robotics. In recent decades, researchers have tried to tackle this problem in a variety of ways.

The control research community has primarily investigated navigation for a known agent (or system) within a known environment. In these cases, a dynamics model of the agent and a geometric map of the environment are both available, so optimal-control schemes can be used to obtain smooth, collision-free trajectories that take the robot to a desired location.

These schemes are typically used to control a number of real physical systems, such as airplanes or industrial robots. However, these approaches are somewhat limited, as they require explicit knowledge of the environment that a system will be navigating. In the learning research community, on the other hand, robot navigation is generally studied for an unknown agent exploring an unknown environment. This means that a system acquires policies to directly map on-board sensor readings to control commands in an end-to-end manner.

These approaches can have several advantages, as they allow policies to be learned without any knowledge of the system or the environment it will be navigating. Nonetheless, past studies suggest that these techniques do not generalize well across different agents. In addition, learning such policies often requires a vast number of training samples.
